Featured in:

IEEE Sensors Journal

Authors:

Bo Jin, Leandro Cruz and Nuno Gonçalves

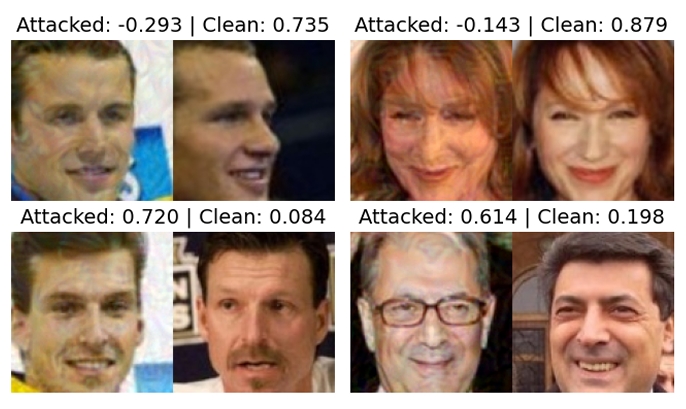

In the last decade, the advancement and popularity of low-cost RGB-D sensors have made it possible to acquire depth information of objects. Consequently, researchers began to address face recognition problems by capturing RGB-D face images with these sensors. However, acquiring the depth of human faces is still not easy because of limitations imposed by privacy policies, and RGB face images remain far more common. Obtaining the depth map directly from the corresponding RGB image could therefore help improve the performance of subsequent face processing tasks, such as face recognition. Intelligent creatures can draw on a large amount of experience to infer 3D spatial information from 2D scenes alone. Machine learning methodology, which aims to solve such problems, can teach computers to generate correct answers through training. To replace depth sensors with generated pseudo-depth maps, in this article we propose a pseudo RGB-D face recognition framework and provide data-driven ways to generate depth maps from 2D face images. Specifically, we design and implement a generative adversarial network model named “D+GAN” to perform multiconditional image-to-image translation with face attributes. We then validate pseudo RGB-D face recognition with experiments on various datasets. In combination with image fusion technologies, especially the non-subsampled shearlet transform (NSST), the accuracy of face recognition is significantly improved.
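The data flow of a pseudo RGB-D pipeline can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `generate_pseudo_depth` is a hypothetical stand-in that returns normalized luminance rather than the output of a trained generator such as D+GAN, and the depth map is simply stacked as a fourth channel instead of being fused with NSST.

```python
import numpy as np


def generate_pseudo_depth(rgb):
    """Hypothetical stand-in for a trained depth generator.

    A real system would run a learned model here; this placeholder only
    returns min-max normalized luminance so that the sketch is runnable.
    """
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    gray = rgb.astype(np.float32) @ weights  # shape (H, W)
    span = gray.max() - gray.min()
    if span == 0:
        # Flat image: no contrast to normalize, return an all-zero map.
        return np.zeros_like(gray)
    return (gray - gray.min()) / span


def to_pseudo_rgbd(rgb):
    """Stack an RGB image (H, W, 3, uint8) with its pseudo-depth map.

    Returns a float32 array of shape (H, W, 4) with values in [0, 1].
    Channel stacking is a simplification, not the NSST fusion of the paper.
    """
    depth = generate_pseudo_depth(rgb)
    rgb01 = rgb.astype(np.float32) / 255.0
    return np.concatenate([rgb01, depth[..., None]], axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    face = rng.integers(0, 256, size=(8, 8, 3), dtype=np.uint8)  # dummy image
    print(to_pseudo_rgbd(face).shape)
```

The resulting four-channel array is the kind of input a downstream RGB-D face recognizer would consume; swapping the placeholder for a trained generator leaves the rest of the flow unchanged.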


Institute of Systems and Robotics, Department of Electrical and Computers Engineering, University of Coimbra