Featured in:

MD Thesis

Authors:

Tiago Dias

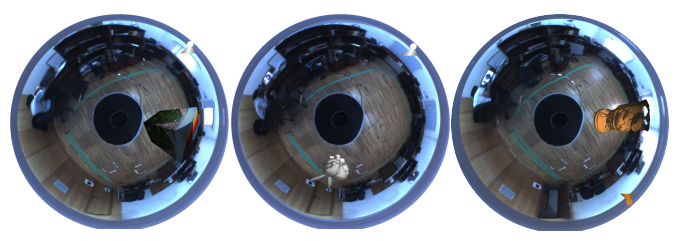

This dissertation proposes a framework for augmented reality using non-central catadioptric imaging devices. Our system consists of a non-central catadioptric imaging device, formed by a perspective camera and a spherical mirror, mounted on a Pioneer 3-DX robot. Our main goal is, given a 3D virtual object in the world with known 3D coordinates, to project this object onto the 2D image plane of the non-central catadioptric imaging device. Our framework projects textured objects (with detailed images or single-color textures) into the image in real time, at up to 20 fps on a laptop, depending on the 3D object being projected.

An augmented reality implementation must address several important issues, such as the projection of 3D virtual objects onto the 2D image plane, occlusions, illumination and shading. To the best of our knowledge, this is the first time this problem has been addressed for non-central systems (all state-of-the-art methods are derived for central camera systems). Since this subject is unexplored for non-central systems, several algorithms and methodologies had to be reformulated and reimplemented to solve our problems.

To structure our approach, we designed a pipeline composed of two stages: pre-processing and real time. Each stage consists of a sequence of steps that must be carried out to ensure the correct operation of the framework. The pre-processing stage contains three steps: camera calibration, 3D object triangulation and object texturization. The real-time stage also has three steps: “QI projection”, occlusions and illumination. To test the robustness of the framework, three distinct 3D virtual objects were used: a parallelepiped, and the Stanford bunny and the Happy Buddha from the Stanford repository.
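The abstract does not detail how the “QI projection” step works, so the following is only a minimal sketch of forward projection through a spherical mirror, not necessarily the thesis's method. It assumes a hypothetical axial setup: the camera center at the origin, the sphere center on the optical axis at distance `d`, mirror radius `r` with `0 < r < d`, and an ideal pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` and no distortion. Because the camera center and the sphere center both lie on the axis, the incident ray, the reflected ray and the mirror normal all lie in the plane containing the axis and the 3D point, so the law of reflection reduces to a 1D root-finding problem. Here it is solved numerically by bisection rather than in closed form; all function and parameter names are illustrative.

```python
import math


def _signed_angle(n, v):
    """Signed angle (radians) from 2D vector n to 2D vector v."""
    return math.atan2(n[0] * v[1] - n[1] * v[0], n[0] * v[0] + n[1] * v[1])


def reflection_point_2d(rho_p, z_p, d, r, tol=1e-12):
    """Mirror point (rho, z) that reflects world point P = (rho_p, z_p) into the camera.

    Hypothetical setup: camera center at the origin, sphere of radius r centered at
    (0, d) with 0 < r < d, and rho_p > 0. Points on the visible side of the sphere
    are parameterized as M(t) = (r sin t, d - r cos t), t in [0, acos(r / d)).
    """
    def g(t):
        m = (r * math.sin(t), d - r * math.cos(t))
        n = (math.sin(t), -math.cos(t))      # outward unit normal at M
        a = (-m[0], -m[1])                    # from M toward the camera center
        b = (rho_p - m[0], z_p - m[1])        # from M toward the world point P
        # Law of reflection: a and b make equal and opposite angles with n.
        return _signed_angle(n, a) + _signed_angle(n, b)

    lo, hi = 0.0, math.acos(r / d) * (1.0 - 1e-9)  # mirror apex to just inside the limb
    g_lo = g(lo)
    if g_lo * g(hi) > 0:
        raise ValueError("no reflection point bracketed; P may not be visible")
    while hi - lo > tol:                       # plain bisection on g(t) = 0
        mid = 0.5 * (lo + hi)
        g_mid = g(mid)
        if g_lo * g_mid <= 0:
            hi = mid
        else:
            lo, g_lo = mid, g_mid
    t = 0.5 * (lo + hi)
    return (r * math.sin(t), d - r * math.cos(t)), t


def project_point(p, d, r, fx, fy, cx, cy):
    """Pixel (u, v) of 3D point p = (x, y, z) seen through the spherical mirror."""
    x, y, z = p
    rho_p = math.hypot(x, y)
    if rho_p == 0.0:
        # A point on the mirror axis reflects at the apex, which images at the principal point.
        return (cx, cy)
    (m_rho, m_z), _ = reflection_point_2d(rho_p, z, d, r)
    # Lift the 2D solution back to 3D: the reflection point lies in the plane
    # spanned by the mirror axis and p.
    mx, my = m_rho * x / rho_p, m_rho * y / rho_p
    # Ideal pinhole projection of the reflection point (no lens distortion).
    return (fx * mx / m_z + cx, fy * my / m_z + cy)
```

In such a sketch, each vertex of the triangulated object would be passed through `project_point`, and the resulting 2D triangles filled with the object's texture. Bisection costs a few dozen evaluations per point, which is one reason a closed-form solution is attractive for real-time use.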
For each of the three objects, several tests were made: using a positional light (on top of a static robot or at an arbitrary position) pointing at the 3D object; using a light source moving along two different trajectories (one at a time); using three light sources of different colors, each with its own movement, pointing at the object; and using a light source mounted on top of a moving robot, pointing at the position of the 3D virtual object.
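The abstract does not state which illumination model is used. As a hedged illustration of shading under several colored positional lights, the sketch below applies a Lambertian (diffuse) term plus a constant ambient term to one flat-shaded triangle. The function name `shade_triangle`, the ambient factor and the per-triangle (flat) shading are assumptions, not details taken from the thesis.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def shade_triangle(vertices, albedo, lights, ambient=0.1):
    """Lambertian shading of a flat triangle lit by positional point lights.

    vertices: three (x, y, z) points, counter-clockwise when seen from outside.
    albedo: (r, g, b) surface color in [0, 1].
    lights: list of ((x, y, z) position, (r, g, b) color) pairs.
    Returns the shaded (r, g, b) color, clamped to [0, 1].
    """
    v0, v1, v2 = vertices
    normal = _normalize(_cross(_sub(v1, v0), _sub(v2, v0)))
    centroid = tuple(sum(c) / 3.0 for c in zip(v0, v1, v2))
    color = [ambient * a for a in albedo]
    for position, light_color in lights:
        to_light = _normalize(_sub(position, centroid))
        lambert = max(0.0, _dot(normal, to_light))  # lights behind the face add nothing
        for i in range(3):
            color[i] += albedo[i] * light_color[i] * lambert
    return tuple(min(1.0, c) for c in color)
```

For moving lights, the light positions would simply be updated each frame before shading; several colored lights are handled by summing their contributions, as in the loop above.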

© 2024 VISTeam | Made by Black Monster Media

Institute of Systems and Robotics, Department of Electrical and Computer Engineering, University of Coimbra