Featured in:

PhD Thesis

Authors:

Gledson Melotti

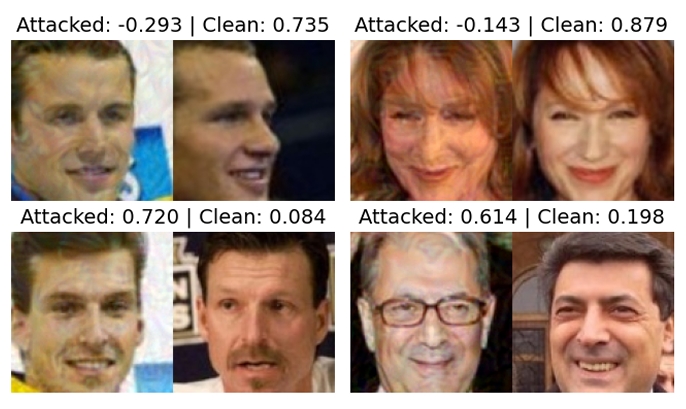

In the last recent years, machine learning techniques have occupied a great space in order to solve problems in the areas related to perception systems applied to autonomous driving and advanced driver-assistance systems, such as: road users detection, traffic signal recognition, road detection, multiple object tracking, lane detection, scene understanding. In this way, a large number of techniques have been developed to cope with problems belonging to sensory perception field. Currently, deep network is the state-of-the-art for object recognition, begin softmax and sigmoid functions as prediction layers. Such layers often produce overconfident predictions rather than proper probabilistic scores, which can thus harm the decision-making of “critical” perception systems applied in autonomous driving and robotics. Given this, we propose a probabilistic approach based on distributions calculated out of the logit layer scores

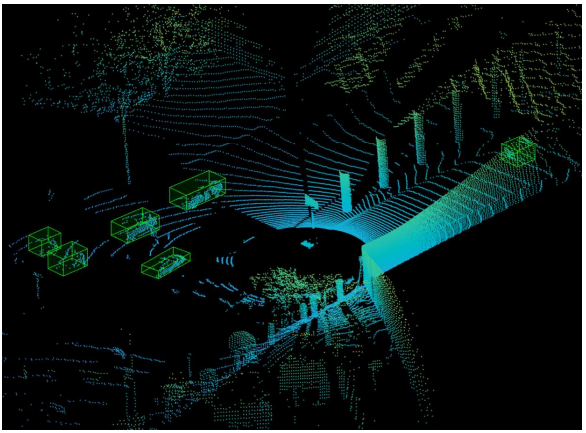

of pre-trained networks which are then used to constitute new decision layers based on Maximum Likelihood (ML) and Maximum a-Posteriori (MAP) inference. We demonstrate that the hereafter called ML and MAP functions are more suitable for probabilistic interpretations than softmax and sigmoid-based predictions for object recognition, where our approach shows promising performance compared to the usual softmax and sigmoid functions, with the benefit of enabling interpretable probabilistic predictions. Another advantage of the approach introduced in this thesis is that the so-called ML and MAP functions can be implemented in existing trained networks, that is, the approach benefits from the output of the logit layer of pre-trained networks. Thus, there is no need to carry out a new training phase since the ML and MAP functions are used in the test/prediction phase. To validate our methodology, we explored distinct sensor modalities via RGB images and LiDARs (3D point clouds, range-view and reflectance-view) data from the KITTI dataset. The range-view and reflectance-view modalities were obtained by projecting the range/reflectance data to the 2D image-plane and consequently upsampling the projected points. The results achieved by the proposed approach were presented considering the individual modalities and through the early and late fusion strategies.

© 2024 VISTeam | Made by Black Monster Media

Institute of Systems and Robotics Department of Electrical and Computers Engineering University of Coimbra