Multimodal Deep-Learning for Object Recognition Combining Camera and LIDAR Data

Featured in:

2020 IEEE International Conference on Autonomous Robot Systems and Competitions, Ponta Delgada, Portugal

Authors:

Gledson Melotti, Cristiano Premebida and Nuno Gonçalves

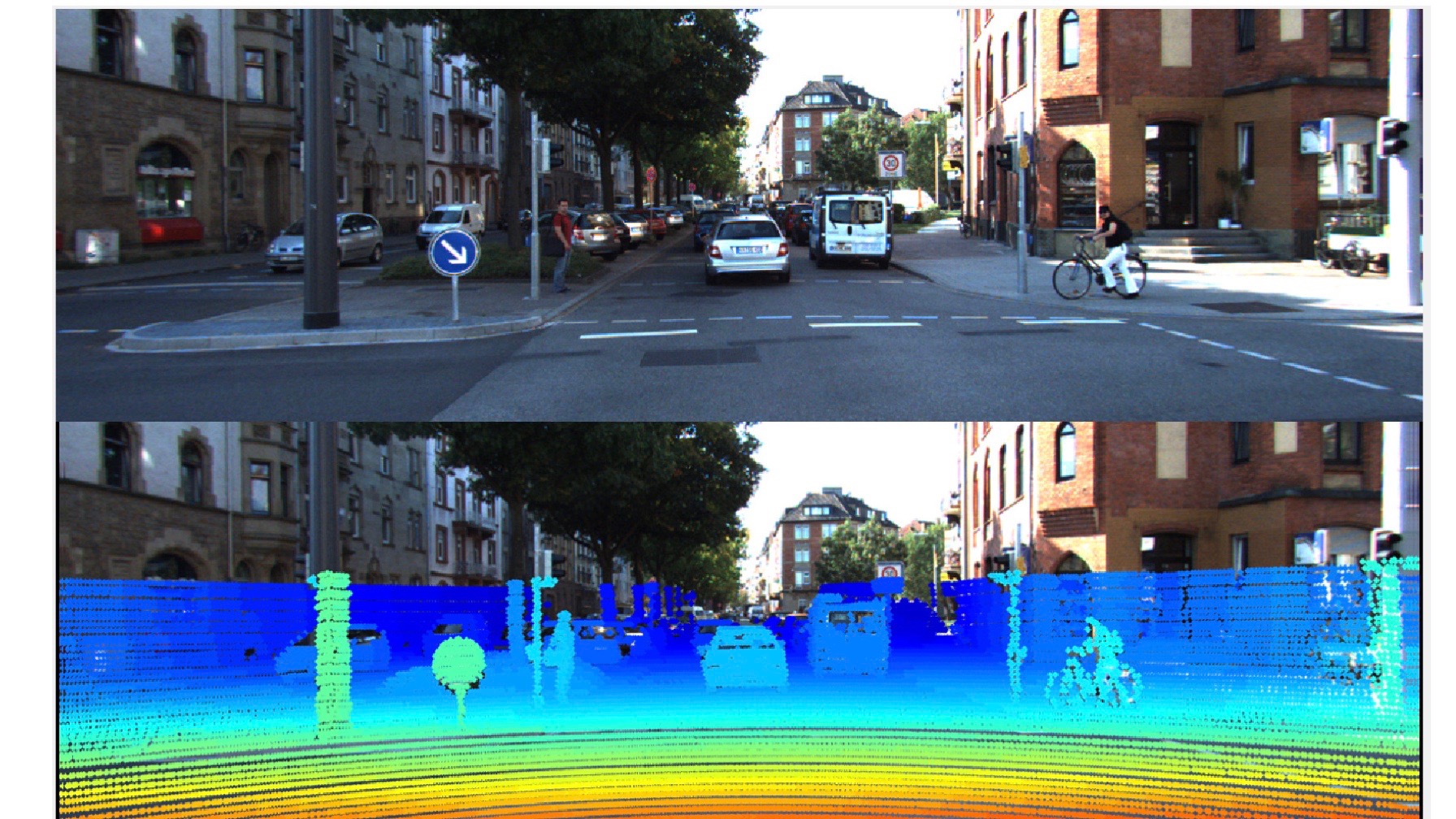

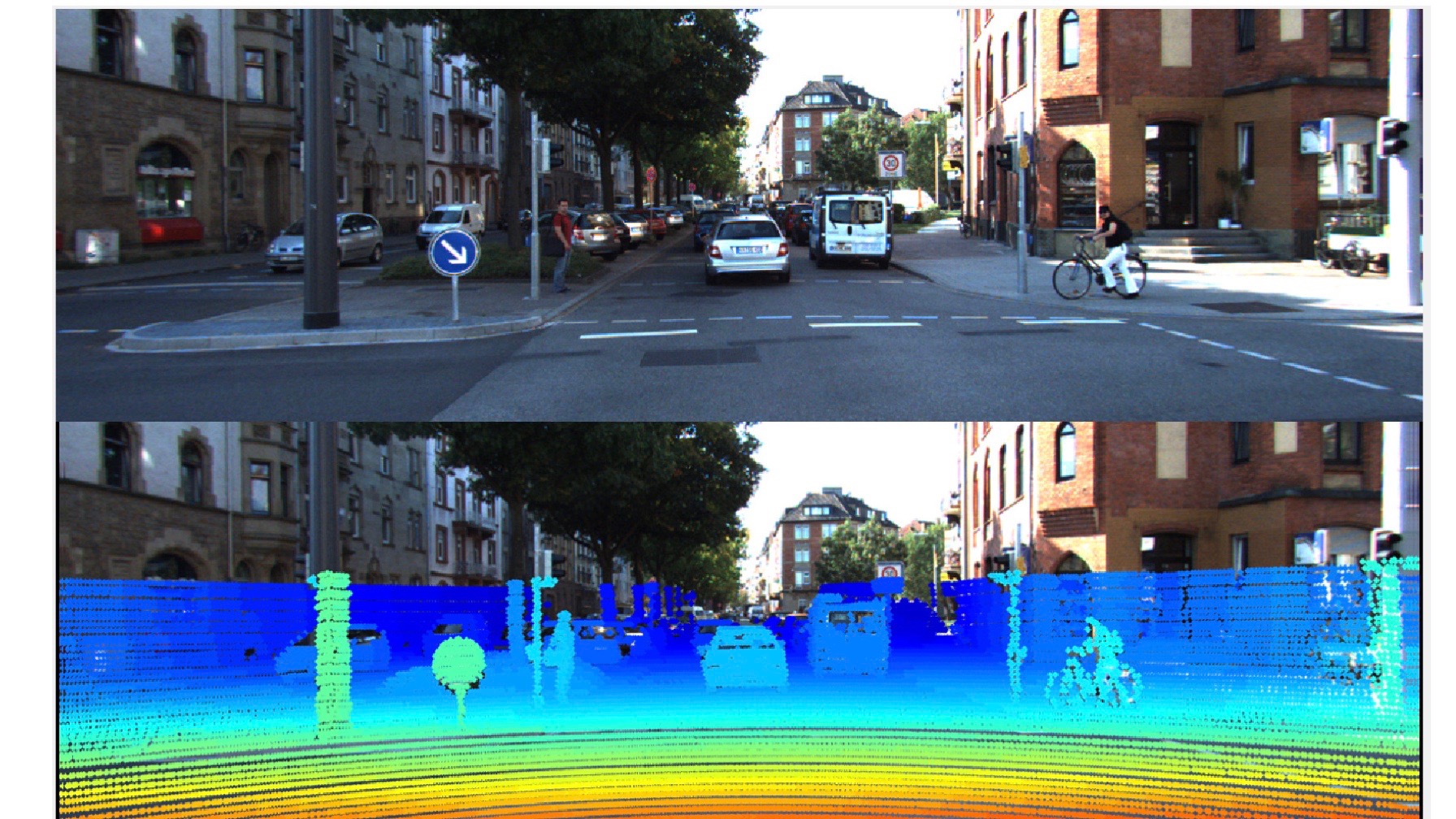

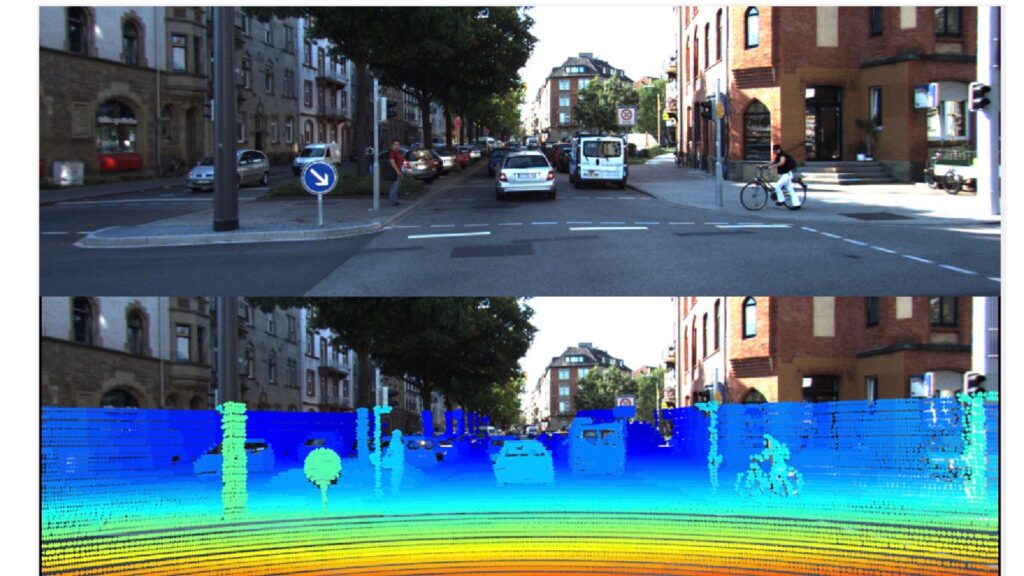

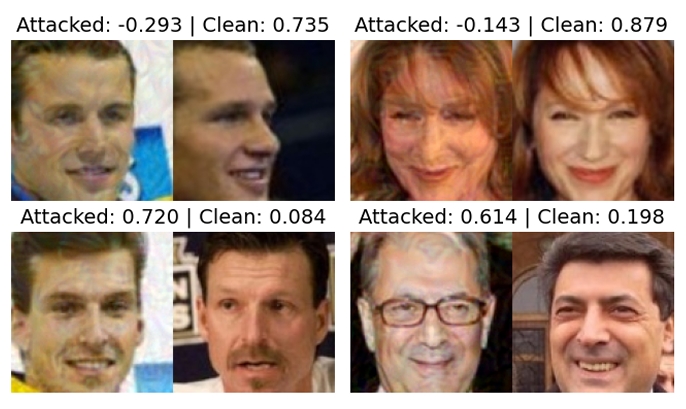

Object detection and recognition is a key component of autonomous robotic vehicles, as evidenced by the continuous efforts of the robotics community in areas related to object detection and sensory perception systems. This paper presents a study of multisensor (camera and LIDAR) late-fusion strategies for object recognition. In this work, LIDAR data is processed both as 3D points and as a 2D representation in the form of a depth map (DM), which is obtained by projecting the LIDAR 3D point cloud onto the 2D image plane and then applying an upsampling strategy that generates a high-resolution 2D range view. A CNN (Inception V3) is used as the classifier on the RGB images and on the DMs (LIDAR modality). A 3D network (PointNet), which performs classification directly on the 3D point clouds, is also considered in the experiments. One motivation of this work is to incorporate the distance to the objects, as measured by the LIDAR, as a relevant cue to improve classification performance. A new range-based average weighting strategy is proposed, which considers the relationship between the deep models' performance and the distance of the objects. A classification dataset based on the KITTI database is used to evaluate the deep models and to support the experimental part. We report extensive results for single-modality approaches, i.e., using the RGB and LIDAR models individually, and for late-fusion multimodal approaches.
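The idea of weighting each modality by how well it performs at the object's range can be illustrated with a minimal Python sketch. This is not the authors' implementation: the distance bins, the per-bin accuracy table (assumed to have been measured on a validation split), and the names `distance_bin` and `range_weighted_fusion` are hypothetical. Here the weight of each modality is simply its accuracy in the bin containing the LIDAR-measured distance, normalized so the weights sum to one, and the fused output is the weighted average of the per-modality class-probability vectors.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence

# Hypothetical distance bins in metres: [0,10), [10,20), [20,30), [30,inf)
BIN_EDGES: List[float] = [10.0, 20.0, 30.0]


def distance_bin(distance_m: float, edges: Sequence[float] = BIN_EDGES) -> int:
    """Return the index of the distance bin that contains distance_m."""
    return bisect_right(edges, distance_m)


def range_weighted_fusion(
    probs: Dict[str, List[float]],
    bin_accuracy: Dict[str, List[float]],
    distance_m: float,
) -> List[float]:
    """Late fusion: weighted average of class probabilities, weights chosen by range.

    probs: modality name -> class-probability vector from that modality's model.
    bin_accuracy: modality name -> assumed validation accuracy per distance bin.
    """
    b = distance_bin(distance_m)
    # Each modality's raw weight is its accuracy in the object's distance bin.
    weights = {m: bin_accuracy[m][b] for m in probs}
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    n_classes = len(next(iter(probs.values())))
    fused = [0.0] * n_classes
    for m, p in probs.items():
        if len(p) != n_classes:
            raise ValueError("all probability vectors must have the same length")
        w = weights[m] / total  # normalized weight for this modality
        for c in range(n_classes):
            fused[c] += w * p[c]
    return fused


if __name__ == "__main__":
    # Illustrative numbers only; not results from the paper.
    probs = {"rgb": [0.7, 0.2, 0.1], "depth_map": [0.4, 0.5, 0.1]}
    acc = {"rgb": [0.95, 0.90, 0.80, 0.70], "depth_map": [0.90, 0.85, 0.80, 0.75]}
    fused = range_weighted_fusion(probs, acc, distance_m=35.0)
    print(fused, "predicted class:", max(range(len(fused)), key=fused.__getitem__))
```

The dictionary-based interface accepts any number of modalities, so a third entry (for example, PointNet scores on the raw point cloud) could be added without changing the fusion code. Using per-bin accuracy as the weight is one simple choice; other range-dependent performance measures would slot into the same structure.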

Institute of Systems and Robotics, Department of Electrical and Computer Engineering, University of Coimbra